CAPTCHA Is Us: The Invisible Labor Behind Human Verification

We analyze how verification systems do more than provide security: they turn users into a workforce for training artificial intelligence. This article explores the delicate balance between data protection, user experience, and the undeclared value of our digital interactions.


Millions of times a day, the global digital flow is interrupted for a fraction of a second. Before we can reach a login screen or submit a contact form, we are asked to identify traffic lights, crosswalks, fire hydrants, or grainy bicycles. What we perceive as a trivial security measure is in fact one of the largest distributed collective labor operations ever carried out. At GoBooksy, where we work daily on data flow architecture and user interaction, we constantly see that this mechanism is not merely an entry gate but an often unbalanced exchange of value. We are not just proving we are human; we are lending our cognitive capacity to teach machines to see the world as we do.

The operational reality we encounter in managing web infrastructure shows that the classic Turing test has been turned on its head: instead of a human judging whether a machine can pass as human, a machine now asks a human to prove they are not a machine. In the distorted-word era of reCAPTCHA, these tests also served to digitize books that optical character recognition could not read. Every time a user deciphered a distorted word, they were literally rescuing a fragment of an analog archive. Today the images we are shown have changed radically, because the training needs of artificial intelligence systems have changed. When we select every square containing a bus, we are refining autonomous driving algorithms, teaching vehicles to distinguish urban obstacles with a precision that, in visually ambiguous conditions, only the human eye still provides.

This dynamic introduces significant friction into the user experience, a problem we often face when designing interfaces for our clients. There is a subtle but tangible breaking point: when security becomes too invasive, users abandon the site. We have noticed that aggressive use of visual challenges drastically reduces conversion rates and frustrates people who are simply trying to reach a legitimate service. The paradox is that bots, the automated software these systems are supposed to block, have become so sophisticated that they often solve visual puzzles faster and more accurately than humans. To stay effective, the tests have therefore grown progressively harder for real people, presenting images so blurry, ambiguous, or culturally specific that they become incomprehensible: a fire hydrant or a crosswalk, for instance, does not look the same in every country.

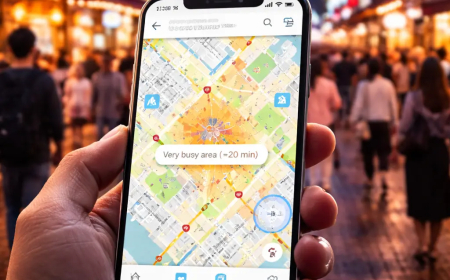

Fortunately, technology is shifting the axis towards invisible verification, based not on what users can solve but on how they behave. In the systems we monitor and integrate into the GoBooksy ecosystem, we increasingly prioritize behavioral biometric analysis. This approach evaluates mouse movement, cursor acceleration, reaction times, and even the micro-hesitations that are intrinsically human and extremely difficult for a scripted algorithm to replicate. We no longer ask users to work for the system; we let the system recognize them through their navigation patterns. While this improves usability by removing interruptions, it also raises deep questions about privacy and constant tracking, because verification becomes a continuous, silent process rather than a single hurdle to clear.
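To make the idea of behavioral signals more concrete, here is a minimal Python sketch that turns a cursor trace into a few of the features mentioned above: speed, acceleration, and pauses that may indicate hesitation. It assumes the page has already collected timestamped (t, x, y) samples, for example from browser mousemove events. The `Sample` type, the `movement_features` function, and the 100 ms pause threshold are hypothetical choices for illustration, not GoBooksy's implementation or any vendor's detector.

```python
import math
from dataclasses import dataclass


@dataclass
class Sample:
    """One cursor observation (hypothetical format): time in seconds, position in pixels."""
    t: float
    x: float
    y: float


def movement_features(samples: list[Sample], pause_threshold: float = 0.1) -> dict[str, float]:
    """Compute illustrative behavioral features from a cursor trace.

    pause_threshold (seconds) is an assumed cutoff: a gap between consecutive
    samples at least this long is counted as a pause (possible hesitation),
    since browsers stop emitting mousemove events while the cursor rests.
    """
    if len(samples) < 3:
        raise ValueError("need at least three samples")

    # Speed of each segment between consecutive samples (pixels per second).
    speeds: list[float] = []
    pauses = 0
    for a, b in zip(samples, samples[1:]):
        dt = b.t - a.t
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        if dt >= pause_threshold:
            pauses += 1
        speeds.append(math.hypot(b.x - a.x, b.y - a.y) / dt)

    # Acceleration between consecutive segments; the time step is the distance
    # between the two segment midpoints, (t[i+1] - t[i-1]) / 2.
    accels = [
        (speeds[i] - speeds[i - 1]) / ((samples[i + 1].t - samples[i - 1].t) / 2)
        for i in range(1, len(speeds))
    ]

    mean_speed = sum(speeds) / len(speeds)
    std_speed = math.sqrt(sum((s - mean_speed) ** 2 for s in speeds) / len(speeds))
    return {
        "mean_speed": mean_speed,
        # Coefficient of variation: zero for perfectly uniform movement.
        "speed_cv": std_speed / mean_speed if mean_speed > 0 else 0.0,
        "max_abs_accel": max(abs(a) for a in accels),
        "pauses": pauses,
    }


# Usage: a scripted straight line at a constant rate vs. an irregular, human-like trace.
scripted = [Sample(i * 0.0625, i * 8.0, 0.0) for i in range(20)]
humanlike = [
    Sample(0.00, 0, 0),
    Sample(0.02, 3, 1),
    Sample(0.05, 10, 4),
    Sample(0.30, 12, 5),  # a 250 ms gap: the cursor rested before moving on
    Sample(0.32, 20, 9),
]
print(movement_features(scripted))
print(movement_features(humanlike))
```

A perfectly straight path sampled at a constant rate, the kind a naive script produces, yields zero speed variation and zero acceleration, while the human-like trace shows irregular speeds and a pause. A real system would feed many such features into a trained model rather than rely on any single rule, and, as noted above, it has to collect the raw trace in order to analyze it, which is exactly where the privacy question arises.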

Total security on the web is an illusion, and risk management has to strike a balance. We often step in to recalibrate defensive systems that, in trying to exclude every possible automated threat, end up excluding segments of legitimate users. These include people using assistive technologies, who may navigate by keyboard or screen reader and therefore produce no mouse trajectory and cannot solve a purely visual challenge, and people browsing from shared connections, such as corporate networks or public Wi-Fi, whose IP reputation is mixed. The technical challenge is not to build higher walls but smarter filters that can distinguish malicious intent from genuine human behavior, even when that behavior is imperfect or unpredictable.

The future of online identity verification is moving away from explicit cognitive proofs towards a model of reputational trust. In this scenario, GoBooksy continues to observe and adapt its technologies, aware that every click on an image and every second of mouse hesitation is valuable data. We have gone from being simple users to becoming, unwittingly, the final validators of reality for the machines we are building. The next time a system asks us to confirm our humanity, we will know it is not only checking what we are, but learning from us how to be a little more human itself.