How much data do we create?

We analyze the dizzying growth of global data volumes, exploring what it really means to generate 2.5 quintillion bytes a day. A deep dive into infrastructure, hidden costs, and critical information management in the era of digital hyper-production.

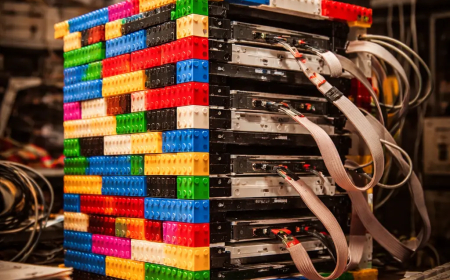

When we watch the monitors in our control centers at GoBooksy, we don't just see numbers scrolling by; we see an uninterrupted flow of human and machine activity translating into digital weight. The often-cited figure of 2.5 quintillion bytes (2.5 exabytes) generated every day is hard for the human mind to visualize, but for those of us who manage infrastructure, this number has a very concrete physical reality. It means heat dissipated by servers, rising energy demand, and the continuous need to expand storage that fills up at a speed unprecedented in the history of technology.
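To make the figure easier to picture, here is a minimal back-of-the-envelope sketch that converts 2.5 quintillion bytes a day into more familiar units. The 20 TB drive capacity is our own illustrative assumption, not a figure from any measurement, and the function name is hypothetical.

```python
# Back-of-the-envelope conversion of the daily global data volume.
BYTES_PER_DAY = 2_500_000_000_000_000_000  # 2.5 quintillion bytes (the cited figure)
SECONDS_PER_DAY = 86_400
ASSUMED_DRIVE_BYTES = 20 * 10**12  # assumption: one 20 TB (decimal) hard drive


def daily_volume_summary(bytes_per_day=BYTES_PER_DAY,
                         drive_bytes=ASSUMED_DRIVE_BYTES):
    """Express a daily byte count in exabytes, TB per second, and drives per day."""
    return {
        "exabytes_per_day": bytes_per_day / 10**18,
        "terabytes_per_second": bytes_per_day / SECONDS_PER_DAY / 10**12,
        "drives_filled_per_day": bytes_per_day / drive_bytes,
    }


if __name__ == "__main__":
    for name, value in daily_volume_summary().items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions, the daily volume works out to roughly 29 terabytes every second, or about 125,000 such drives filled per day.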

The most striking fact is not the current volume but the speed at which we got here. The often-repeated estimate that 90% of all data existing in the world today was created in just the last two years forces us to completely rethink our approach to system design. We are not witnessing linear growth, but an exponential explosion that renders old preservation paradigms obsolete. In our daily projects, we notice that storage planning, which used to be done on an annual basis, now requires almost monthly flexibility to avoid exhausting available capacity.
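The arithmetic behind that shift is worth spelling out. If 90% of all data is less than two years old, the total must have grown tenfold in two years. The sketch below works out what that implies per year and per month, assuming (and this is our simplification) that growth is smoothly exponential over the whole window. The function name and parameters are hypothetical.

```python
import math


def implied_growth(recent_share=0.90, window_years=2.0):
    """Derive growth rates from 'recent_share of all data is newer than window_years'.

    Assumes steady exponential growth across the window, which is a simplification.
    """
    # If 90% is new, the old 10% has been multiplied by 1 / 0.10 = 10.
    growth_over_window = 1.0 / (1.0 - recent_share)
    annual_factor = growth_over_window ** (1.0 / window_years)
    monthly_factor = annual_factor ** (1.0 / 12.0)
    doubling_months = 12.0 * math.log(2) / math.log(annual_factor)
    return {
        "growth_over_window": growth_over_window,
        "annual_factor": annual_factor,
        "monthly_factor": monthly_factor,
        "doubling_months": doubling_months,
    }


if __name__ == "__main__":
    for name, value in implied_growth().items():
        print(f"{name}: {value:.3f}")
```

Taken at face value, the estimate implies volumes multiplying by about 3.2 each year, growing about 10% each month, and doubling roughly every seven months. A capacity plan reviewed once a year would be out of date several times over before its next revision.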

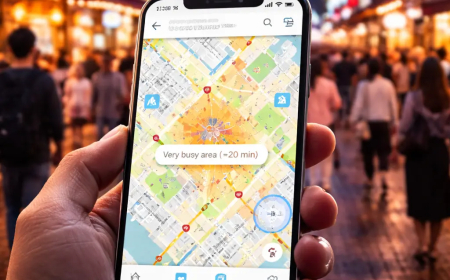

People often make the mistake of thinking this mass of data comes exclusively from conscious user actions, like sending emails, uploading 4K videos, or interacting on social networks. The operational reality we face at GoBooksy tells a different story. A huge portion of these quintillions of bytes is generated by machines talking to other machines. Industrial IoT sensors, system logs recording every micro-event on a server, automated backups, and the hidden metadata behind every file make up a deafening and voluminous "background noise." This invisible traffic is essential to the functioning of the modern network, but it occupies real space on real disks, posing enormous challenges for indexing and information retrieval.

The direct consequence of this hyper-production is a phenomenon we observe increasingly often in the companies we work with: the accumulation of "Dark Data." This is information that is collected, processed, and archived but never used again for strategic or decision-making purposes. Keeping this data alive has a high economic and environmental cost. We see infrastructures slowed down by terabytes of duplicate or obsolete files, which complicate backup operations and make systems less responsive. The challenge today is no longer how to store everything, but how to understand what has value and what can be let go.
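Duplicate files are the easiest part of this problem to measure. The following is a minimal sketch, not a description of any GoBooksy tooling, of how one might find byte-identical files under a directory and estimate the space they waste. It groups files by size first, so that only plausible candidates are hashed. The function names are hypothetical.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path, chunk_size=1024 * 1024):
    """Return the SHA-256 hex digest of a file, read in chunks to bound memory use."""
    hasher = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            hasher.update(chunk)
    return hasher.hexdigest()


def find_duplicates(root):
    """Return groups (lists of paths) of files under root with identical content."""
    by_size = defaultdict(list)
    for path in Path(root).rglob("*"):
        if path.is_file():
            by_size[path.stat().st_size].append(path)

    by_digest = defaultdict(list)
    for paths in by_size.values():
        if len(paths) < 2:
            continue  # a unique size cannot have a duplicate, so skip hashing
        for path in paths:
            by_digest[file_digest(path)].append(path)

    return [sorted(group) for group in by_digest.values() if len(group) > 1]


def reclaimable_bytes(groups):
    """Bytes freed if only one copy from each duplicate group were kept."""
    return sum(group[0].stat().st_size * (len(group) - 1) for group in groups)
```

A report like this only identifies candidates. Whether a copy is truly redundant, or an intentional backup, remains a decision for whoever owns the data.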

The impact of this acceleration is also felt in security and privacy. The more data is created, the wider the attack surface becomes and the more complex compliance management gets. Every byte generated carries a responsibility to protect it. In the workflows we design at GoBooksy, attention has shifted from raw storage capacity to intelligent management of the data life cycle. It is not enough to have seemingly unlimited space in the cloud; one needs the ability to govern information, distinguishing the useful signal from the background noise clogging digital highways.

We must begin to treat data not as an infinite and free resource, but as matter with real weight. The ease with which we create digital content has accustomed us to overlooking its long-term impact. Every time an automated system saves a file version or a user syncs a device, a chain of complex physical and energy-consuming processes is triggered. Understanding the real scale of these 2.5 quintillion bytes a day is the first step in moving from a culture of indiscriminate accumulation to a strategy of digital efficiency, where the quality of information finally prevails over its sheer quantity.